GO-Surf: Neural Feature Grid Optimization

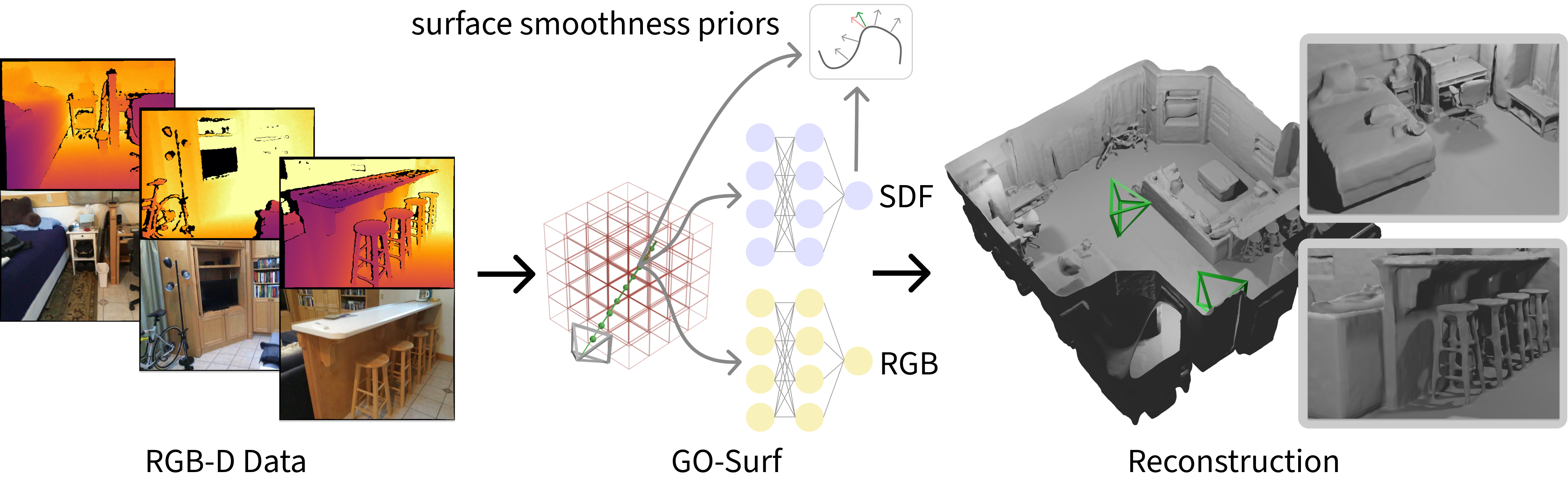

In this work we present GO-Surf, a direct feature grid optimization method for accurate and fast surface reconstruction from RGB-D sequences. We model the underlying scene with a learned hierarchical feature voxel grid that encapsulates multi-level local geometric and appearance information. Feature vectors are directly optimized such that, after being tri-linearly interpolated, decoded by two shallow MLPs into signed distance and radiance values, and rendered via surface volume rendering, the discrepancy between synthesized and observed RGB/depth values is minimized. Our supervision signals (RGB, depth and approximate SDF) can be obtained directly from input images without any need for fusion or post-processing. We formulate a novel SDF gradient regularization term that encourages surface smoothness and hole filling while maintaining high-frequency details. GO-Surf can optimize sequences of 1-2K frames in 15-45 minutes, a speedup of 60 times over NeuralRGB-D (Azinovic et al. 2022), the most related approach based on an MLP representation, while maintaining on-par performance on standard benchmarks. We also show that with slightly reduced voxel resolution GO-Surf is able to run online sequential mapping at an interactive frame rate.

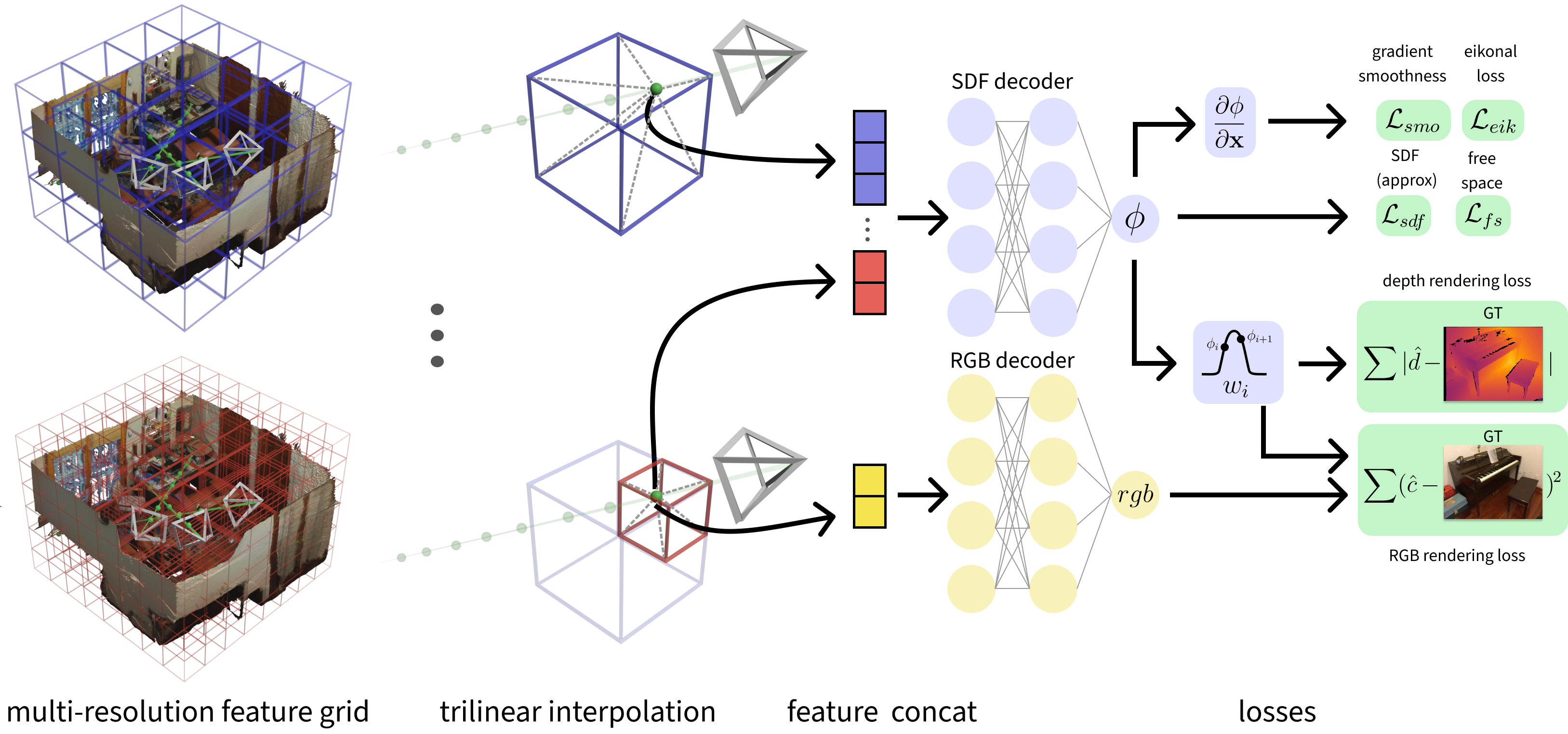

GO-Surf uses multi-level feature grids and two shallow MLP decoders. Given a sample point along a ray, each grid is queried via tri-linear interpolation. Multi-level features are concatenated and decoded into SDF, and used to compute the sample weight. Color is decoded separately from the finest grid. Loss terms are applied to SDF values, and rendered depth and color. The gradient of the SDF is calculated at each query point and used for Eikonal and smoothness regularization.
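The query path described above can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the GO-Surf implementation: the function names, grid sizes, voxel sizes and feature width are all hypothetical, the MLP decoders are omitted, the bell-shaped weight is the form used by NeuralRGB-D and is assumed here for illustration, and the SDF gradient is taken with central finite differences as a stand-in for however the actual system computes it.

```python
import numpy as np

def trilinear(grid, pts, voxel_size):
    """Tri-linearly interpolate corner features at continuous points.

    grid: (X, Y, Z, C) features at voxel corners, origin at (0, 0, 0).
    pts:  (N, 3) query points in the same units as voxel_size.
    """
    res = np.array(grid.shape[:3])
    g = np.clip(pts / voxel_size, 0.0, res - 1)         # continuous grid coords
    i0 = np.minimum(np.floor(g).astype(int), res - 2)   # lower corner index
    t = g - i0                                          # offsets in [0, 1]
    out = np.zeros((pts.shape[0], grid.shape[3]))
    for dx in (0, 1):
        for dy in (0, 1):
            for dz in (0, 1):
                d = np.array([dx, dy, dz])
                # Weight of this corner: product of per-axis linear weights.
                w = np.prod(np.where(d == 1, t, 1.0 - t), axis=1)
                c = i0 + d
                out += w[:, None] * grid[c[:, 0], c[:, 1], c[:, 2]]
    return out

def query_multilevel(grids, voxel_sizes, pts):
    """Query every level and concatenate the features along the channel axis."""
    return np.concatenate(
        [trilinear(g, pts, v) for g, v in zip(grids, voxel_sizes)], axis=1)

def sdf_to_weights(sdf, trunc):
    """Bell-shaped sample weights peaking at the SDF zero crossing (assumed form)."""
    sig = lambda x: 1.0 / (1.0 + np.exp(-x))
    w = sig(sdf / trunc) * sig(-sdf / trunc)
    return w / w.sum()

def eikonal_loss(sdf_fn, pts, eps=1e-4):
    """Mean squared deviation of the SDF gradient norm from 1 (finite differences)."""
    grads = np.stack(
        [(sdf_fn(pts + eps * e) - sdf_fn(pts - eps * e)) / (2 * eps)
         for e in np.eye(3)], axis=1)
    return np.mean((np.linalg.norm(grads, axis=1) - 1.0) ** 2)

# Hypothetical three-level setup over a 0.96 m cube with 4 features per level.
rng = np.random.default_rng(0)
voxel_sizes = [0.24, 0.12, 0.06]
extent = 0.96
grids = [rng.normal(size=(int(round(extent / v)) + 1,) * 3 + (4,))
         for v in voxel_sizes]
pts = rng.uniform(0.0, extent, size=(8, 3))
feats = query_multilevel(grids, voxel_sizes, pts)  # one feature row per sample
```

In a full pipeline the concatenated features would be passed to the SDF decoder, the decoded SDF values along each ray converted to weights, and depth and color rendered as weighted sums over the samples.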

We show our reconstruction results on real-world ScanNet scenes as well as synthetic scenes, and compare with NeuralRGB-D (Azinovic et al. 2022) and the original BundleFusion (Dai et al. 2017) result. Overall, GO-Surf produces more complete and smoother reconstructions without losing details. In areas with missing depth, such as TV screens, our method demonstrates strong "hole-filling" ability and produces high-quality results. Our method also captures thin structures such as chair legs better than our baselines.

We further demonstrate the scene completion ability of GO-Surf. As can be seen, although both NeuralRGB-D and GO-Surf are able to fill in unobserved or missing-depth regions, NeuralRGB-D tends to yield much noisier completion results with many holes and artefacts (such as on the floor and the wall behind the TV screen). In contrast, GO-Surf produces smoother completions and more natural transitions.

GO-Surf's fast runtime enables online sequential operation at 15 FPS (with a voxel size of 6 cm). We run sequential mapping (reconstruction with ground-truth camera poses) on ScanNet scene0000 and show a qualitative comparison with NICE-SLAM (Zhu et al. CVPR'22) in mapping mode, also with ground-truth camera poses. Note that GO-Surf achieves better reconstruction quality while running 30 times faster than NICE-SLAM.

We presented GO-Surf, a novel approach to surface reconstruction from a sequence of RGB-D images. We achieved a level of smoothness and hole filling on par with MLP-based approaches while reducing the training time by an order of magnitude. Our system produces accurate and complete meshes of indoor scenes.

However, just like other voxel-based architectures, GO-Surf suffers from high memory consumption, which at the moment scales cubically with the scene dimensions. Voxel hashing (Niessner et al. 2013) or octree-based sparsification could significantly reduce the memory footprint of our system, and we intend to explore this direction in future work. Moreover, GO-Surf currently overfits to a single scene. Exploiting priors learnt on large datasets and using them at inference time could be another interesting future direction.
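To make the cubic scaling concrete, the following back-of-the-envelope sketch counts the bytes of a dense feature grid. The scene extents, voxel sizes, channel count and the helper name `dense_grid_bytes` are hypothetical choices for illustration and are not taken from the GO-Surf configuration.

```python
def dense_grid_bytes(extent_m, voxel_size_m, channels, bytes_per_value=4):
    """Bytes for a dense grid storing `channels` float values per voxel."""
    n_voxels = 1
    for side in extent_m:
        # Number of voxels along this axis of the bounding box.
        n_voxels *= int(round(side / voxel_size_m))
    return n_voxels * channels * bytes_per_value

# Hypothetical scenes: a 4 m cube and an 8 m cube, 16 float32 features per voxel.
small = dense_grid_bytes((4.0, 4.0, 4.0), 0.04, channels=16)
large = dense_grid_bytes((8.0, 8.0, 8.0), 0.04, channels=16)
finer = dense_grid_bytes((4.0, 4.0, 4.0), 0.02, channels=16)

print(small / 1e6, "MB")   # 4 m cube at 4 cm voxels
print(large / small)       # doubling every side length
print(finer / small)       # halving the voxel size
```

Doubling the scene side length and halving the voxel size each multiply the dense storage by eight, which is why sparse structures that allocate voxels only near observed surfaces are attractive.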