
I am a PhD student at the CDT in Foundational AI at UCL, supervised by Prof. Lourdes Agapito and Prof. Niloy Mitra. My research interests lie in object-aware semantic SLAM and 3D reconstruction, combining object-level scene understanding with SLAM systems through learning-based approaches. Before my PhD, I obtained a master's degree in Robotics from UCL and a bachelor's degree in Electrical Engineering from the University of Liverpool.

Email | Google Scholar | GitHub (1k+ stars) | Twitter | LinkedIn


My research interests include semantic SLAM, 3D reconstruction, neural scene representations and robotics. I'm also interested in exploring the intersection of long-term SLAM and embodied AI.


Hengyi Wang, Jingwen Wang, Lourdes Agapito
IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2024
project page | arXiv | code

We present MorpheuS, a dynamic scene reconstruction method that leverages neural implicit representations and diffusion priors to achieve 360° reconstruction of a moving object from a monocular RGB-D video.


Jingwen Wang, Juan Tarrio, Lourdes Agapito, Pablo F. Alcantarilla, Alexander Vakhitov
IEEE Robotics and Automation Letters (RA-L), 2023
project page | arXiv | video | video (bilibili) | code

We present SeMLaPS, a real-time semantic mapping system based on 2D-3D networks that takes a sequence of RGB-D images as input and incrementally outputs a semantic map of the scene. Our experiments show that SeMLaPS achieves state-of-the-art accuracy among real-time 2D-3D networks and generalizes better across sensors than methods based on 3D-only networks.


Hengyi Wang*, Jingwen Wang*, Lourdes Agapito
IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2023
project page | arXiv | video | video (bilibili) | benchmark | code

We present Co-SLAM, a neural SLAM method that performs real-time camera tracking and dense reconstruction based on a joint encoding. Experimental results show that Co-SLAM runs at 10-17 Hz and achieves state-of-the-art scene reconstruction results and competitive tracking performance on various datasets and benchmarks (ScanNet, TUM, Replica, Synthetic RGBD).


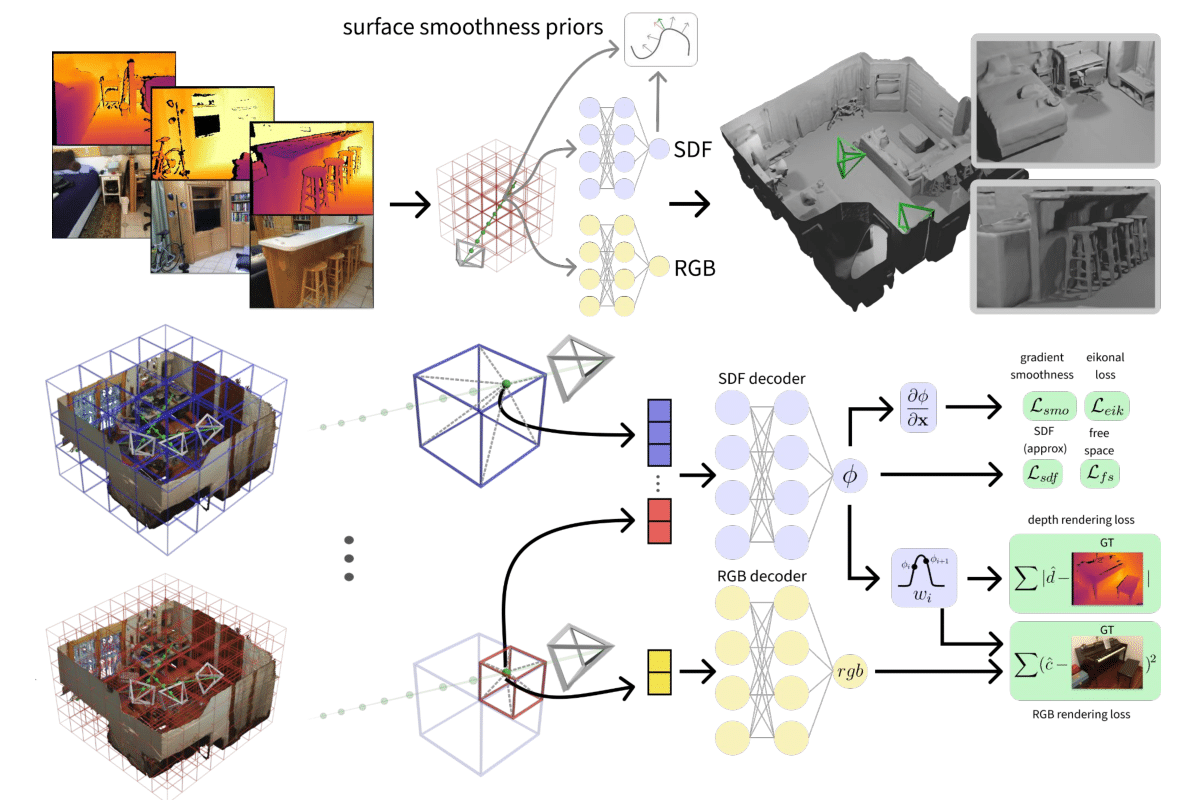

Jingwen Wang*, Tymoteusz Bleja*, Lourdes Agapito
International Conference on 3D Vision (3DV), 2022 (Oral Presentation)
project page | arXiv | video | video (bilibili) | code

We present GO-Surf, a direct feature grid optimization method for accurate and fast surface reconstruction from RGB-D sequences. GO-Surf can optimize sequences of 1-2K frames in 15-45 minutes, a 60× speedup over NeuralRGB-D (Azinovic et al. 2022). We also show that, with a slightly reduced voxel resolution, GO-Surf can perform online mapping at an interactive frame rate (15 FPS).


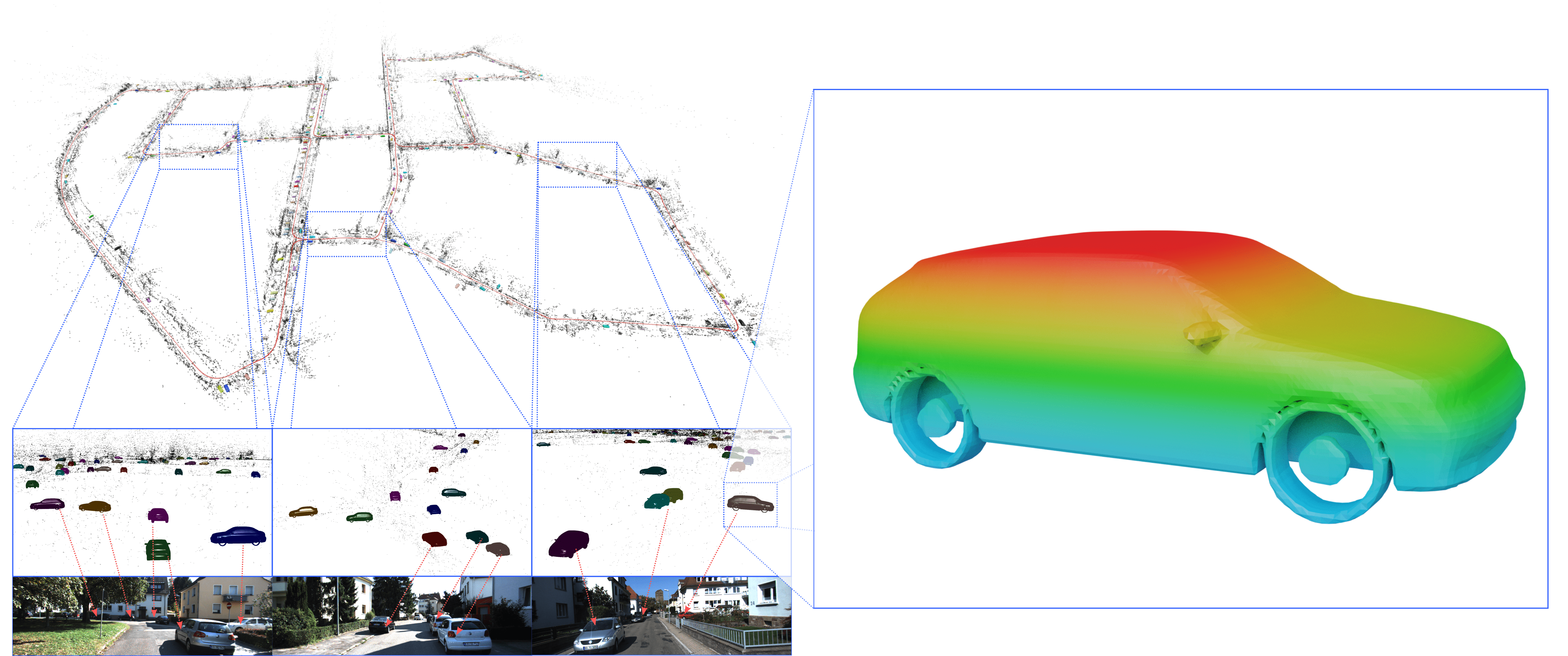

Jingwen Wang, Martin Rünz, Lourdes Agapito
International Conference on 3D Vision (3DV), 2021
project page | arXiv | video | video (bilibili) | code

We propose DSP-SLAM, an object-oriented SLAM system that builds a rich and accurate joint map consisting of dense 3D models for foreground objects and sparse landmark points representing the background. Objects are represented as compact, optimisable codes learned from category-specific deep shape embeddings. Camera poses, object poses and 3D feature points are jointly optimized in a factor graph via object-aware bundle adjustment.


University College London (UCL), 2021
Re-implemented the KinectFusion algorithm in Python and PyTorch. All core components (TSDF volume, frame-to-model tracking, point-to-plane ICP, raycasting, TSDF fusion, etc.) are implemented in pure PyTorch without any custom CUDA kernels. The system runs at 17 Hz on a single RTX 2080 GPU. Code is available on GitHub.
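Two of the KinectFusion components named above, TSDF fusion and the point-to-plane ICP error, reduce to short formulas. Below is a minimal pure-Python sketch for a single voxel and a single point correspondence. It is not the code from the repository: the function names `tsdf_fuse` and `point_to_plane_residual`, the truncation distance, the sign convention (positive SDF in front of the surface) and the weight cap are all assumptions made for illustration.

```python
# Illustrative sketch only: names, defaults and conventions are assumptions,
# not taken from the KinectFusion re-implementation described above.

def tsdf_fuse(tsdf, weight, new_sdf, trunc=0.05, new_weight=1.0, max_weight=100.0):
    """Fuse one new signed-distance observation into a single voxel.

    new_sdf is assumed to be (measured depth - voxel depth) along the camera
    ray, so it is positive in front of the observed surface. The value is
    normalised by the truncation distance, clamped to at most 1, and merged
    with the stored value as a running weighted average.
    """
    if new_sdf < -trunc:
        # Voxel lies far behind the observed surface: leave it unchanged.
        return tsdf, weight
    d = min(1.0, new_sdf / trunc)
    fused = (weight * tsdf + new_weight * d) / (weight + new_weight)
    # Cap the accumulated weight (assumed value) so the map can still adapt.
    return fused, min(weight + new_weight, max_weight)


def point_to_plane_residual(R, t, p, q, n):
    """Signed distance from the transformed source point R @ p + t to the
    tangent plane through target point q with unit normal n."""
    tp = [sum(R[i][j] * p[j] for j in range(3)) + t[i] for i in range(3)]
    return sum(n[i] * (tp[i] - q[i]) for i in range(3))


if __name__ == "__main__":
    v, w = tsdf_fuse(0.0, 0.0, 0.02)    # first observation of the voxel
    v, w = tsdf_fuse(v, w, 0.0)         # second observation, on the surface
    print(v, w)                         # fused value is roughly 0.2, weight 2.0
    I = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
    print(point_to_plane_residual(I, [0, 0, 0.1], [0, 0, 1], [0, 0, 1], [0, 0, 1]))
```

In a PyTorch implementation the same arithmetic would typically be applied to whole voxel and pixel tensors at once, and ICP would stack many such residuals into a linearised least-squares problem for the 6-DoF pose update.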


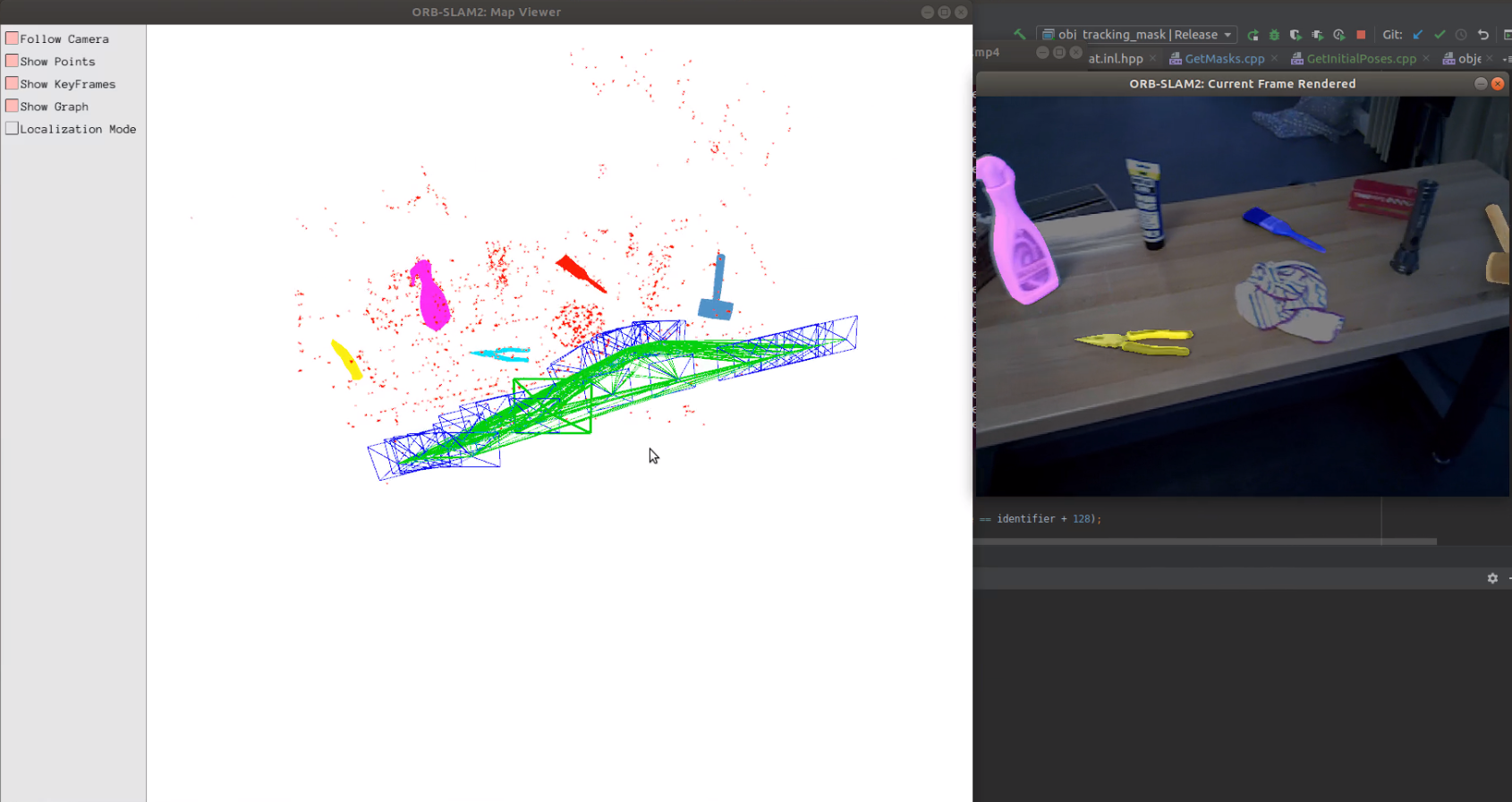

University College London (UCL), 2020
Built a SLAM system that uses ICP to track the 6-DoF poses of multiple objects with known 3D shapes from an RGB-D camera, and reduced tracking uncertainty by including the object poses in a joint factor graph and optimizing them iteratively. This project was part of the EU Horizon 2020 Secondhands project.


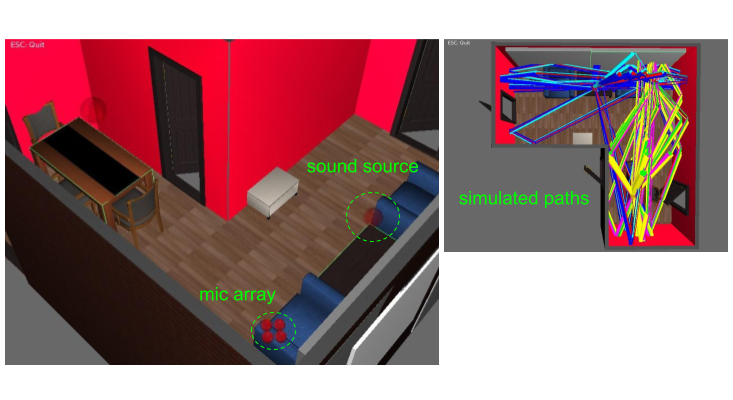

Emotech LTD, 2018
Work done during an internship at Emotech. Developed a data-driven algorithm for sound source direction-of-arrival (DOA) estimation using CNNs, and performed more realistic data augmentation using the HoME platform.


Teaching Assistant: Image Processing, Autumn 2019, 2021, 2023

Teaching Assistant: Robot Vision and Navigation, Spring 2020, 2021


Conference Reviewer: ICRA (2022, 2024), IROS (2022, 2023), NeurIPS (2023), ICLR (2024)

Journal Reviewer: RA-L, IJRR


Thanks to Dr. Jon Barron for sharing the source code of this website.